Learning objectives

In this lesson we will have a look at distance and similarity measures to analyse data. If one can determine the distance between data samples (e.g. between rows in a table, different texts or images) one has access to a range of tools to investigate data and to model it. In this lesson we will have a look at two of them, namely k-nearest neighbour classification and k-means clustering.

k-nearest neighbour classification falls in the category of supervised algorithms, which we already encountered with Decision Trees and Random Forests.

k-means clustering on the other hand is an unsupervised method and therefore forms a new class of algorithms. We will investigate how these algorithms can be used.

This notebook is split according to three learning goals:

- Understand different measures of distance and the curse of dimensionality.

- How the nearest neighbours can be used to build a classifier in scikit-learn.

- Explore a dataset with the unsupervised k-means method.

References

- Chapter 6: Similarity, Neighbors, and Clusters of Data Science for Business by F. Provost and P. Fawcett

Homework

Work through the notebook and solve the exercises.

Figure: A group of cats is called a cluster.

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

from tqdm import tqdm

from sklearn import datasets

from sklearn.neighbors import KNeighborsClassifier

from sklearn.model_selection import cross_validate

from sklearn.ensemble import RandomForestClassifier

from sklearn.cluster import KMeans

from dslectures.core import plot_classifier_boundaries, plot_fitting_graph

# !pip install --upgrade dslectures

Part 1: Similarity and distance measures

Similarity and distance measures are fundamental tools in machine learning. Often we want to know how far apart or similar two datapoints are. Some examples:

- How similar are two customers?

- How close is a search query to a webpage?

- How similar are two pictures?

- How far away is the closest restaurant?

How we measure the distance and similarity influences the results. If the direct, shortest path takes us over a cliff it might not actually be the shortest path time-wise. Also the dimensionality of the data has an impact on the usefulness of the result. When working with high-dimensional data one has to keep the curse of dimensionality in mind that impacts the quality of some metrics.

Figure: Obsolete Russian measures of distance.

See this link for more information about obsolete Russian units of measurement.

In this section we investigate how different measures of distance and similarity behave when more dimensions are added. In machine learning this means that we add more features to the feature matrix. This can have severe implications on the quality of distance measure and methods that rely on it (such as k-nearest neighbour or k-means). This is often called the curse of dimensionality.

First lets define a few distance/similarity measures. These functions calculate the distance/similarity between two vectors x and y:

def euclidean_distance(x, y):

"""calculate euclidean distance between two arrays."""

return np.linalg.norm(x-y)

def cos_similarity(x, y):

"""calculate cosine similarity between two arrays."""

cos_sim = np.dot(x, y)/(np.linalg.norm(x)*np.linalg.norm(y))

return cos_sim

def manhatten_distance(x, y):

"""calculate manhatten distance between two arrays."""

#############

# YOUR CODE #

#############

manhatten_dist= 1

return manhatten_dist

Now we create pairs of random vectors with dimensionality ranging from 1 to 10000. For each dimension, we calculate the distance between the two vectors and store them in a list:

euclidean_distances = []

cos_distances = []

manhatten_distances = []

dims = np.linspace(1, 10000, 1000).astype(int)

for dim in dims:

x = np.random.rand(dim)

y = np.random.rand(dim)

euclidean_distances.append(euclidean_distance(x, y))

cos_distances.append(cos_similarity(x, y))

manhatten_distances.append(manhatten_distance(x, y))

This distance can be plotted in a graph:

plt.plot(dims, euclidean_distances, label='euclidean')

plt.plot(dims, cos_distances, label='cosine')

plt.plot(dims, manhatten_distances, label='manhatten')

plt.ylabel('Distance')

plt.xlabel('Dimensions')

plt.legend(loc='best')

plt.yscale('log')

plt.xscale('log')

plt.grid(True,alpha=0.5)

plt.show()

Exercise 1

Implement the Manhatten-metric and add it to the scaling plot. The mathematical definition of the Manhatten-metric is:

$d_{Manhatten}(\vec{x},\vec{y})=\sum_{i=1}^{n}{\mid x_i - y_i \mid}$

Use the plot to investigate the following questions:

- How does it behave compared to the other two metrics?

- What do you observe when you plot the y-axis on a linear scale (comment the line

plt.yscale('log'))?

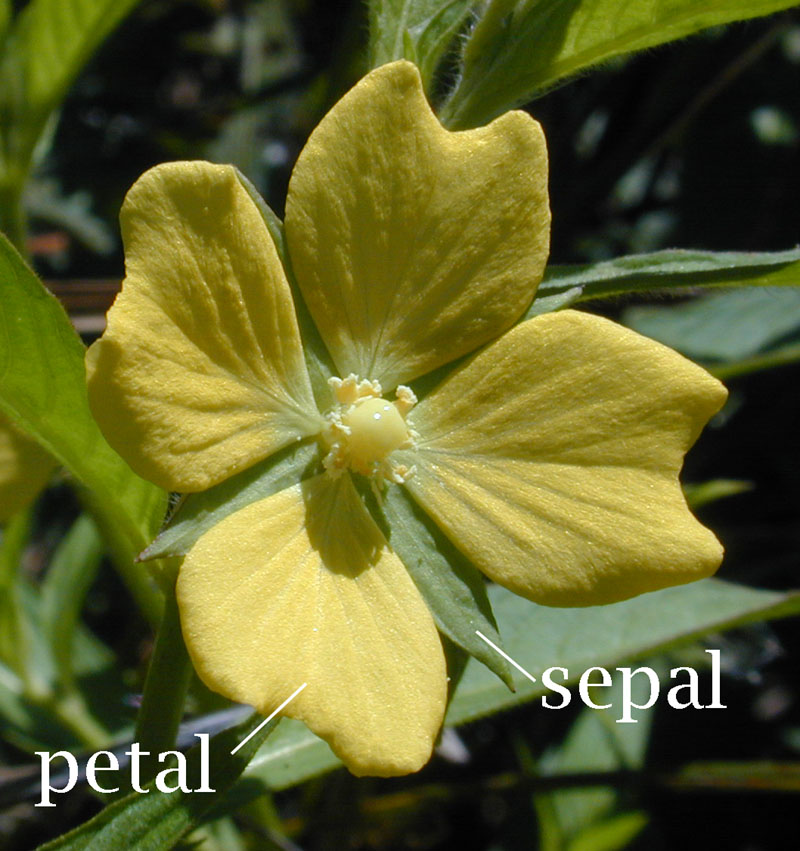

The iris dataset contains the sepal and petal width of three species of flowers. The three species are Iris setosa, Iris versicolor, and Iris virginica. It is one of the most iconic datasets in machine learning and has been around for more than 80 years! It is still widely used today because of its two features it is easy to visualise and study different algorithms on it. Going beyond 2 or 3 dimensions is very hard to visualise and we will study some methods in the next lesson to break down and visualise high dimensional data. For visualisation purposes we'll use the iris dataset in this lesson to investigate the

Figure reference: https://en.wikipedia.org/wiki/Petal#/media/File:Petal-sepal.jpg

iris = datasets.load_iris()

X = iris.data[:, :2]

y = iris.target

k-nearest neighbours classifier

The k-nearest neighbours classifier uses the neighbours of a sample to classify it. Given a new point, it searches the k samples in the training set that are closest to the new point. Then the majority class of the neighbours is used to classify the new point.

Example

Let's assume you are given a new flower and want to classify it using the iris dataset. You measure the petal and sepal width of your flower and compare it to the dataset. You decide to do a k=8 nearest neighbour search. You find that the nearest samples are:

- 5 Iris setosa

- 2 Iris versicolor

- 1 Iris virginica Based on that observation you decide that your flower is a Iris setosa.

Tips & tricks

- Make sure all features are scaled properly (e.g. see

sklearn.preprocessing.StandardScaler) - Use odd number for k to avoid ties

- Voting can be weighted by distance

Usage

The KNeighborsClassifier interface is the same as the one we have already seen for the Decision Tree and Random Forest classifiers. When initializing the classifier one has to pass the number of neighbours k and can then use the known .fit() and .predict() procedure. We introduce the plot_classifier_boundaries to study the behaviour of the classifier more closely.

plot_classifier_boundaries function only works in two dimensions.for k in [1, 7, 21, len(y)]:

clf = KNeighborsClassifier(k)

clf.fit(X, y)

plot_classifier_boundaries(X, y, clf)

plt.title(f"3-Class classification (k = {k})")

plt.show()

Excercise 2

Create the fitting graph for the kNeighborsClassifier on the iris dataset with cross-validation. Run it for the following values of k: np.linspace(1, 120, 120,).astype(int) and use 5 folds (cv=5). What is the best value for k? And how do you interpre k=1 and k=n_samples? When are you most likely to overfit the data?

Excercise 3

Use the plot_classifier_boundaries to plot the classifier boundaries with the following settings:

RandomForestClassifier(n_estimators=1, max_depth=1)RandomForestClassifier(n_estimators=1, max_depth=2)RandomForestClassifier(n_estimators=100, max_depth=None)

What do you observe? Can you explain it?

def plot_clustering_results(X, y, cluster_centers):

"""

Plot results of k-means clustering.

"""

sns.set_style("darkgrid", {"axes.facecolor": ".9"})

sns.scatterplot(X[:,0], X[:,1],

hue=y, edgecolor='k',

palette="nipy_spectral",

legend=False)

sns.scatterplot(cluster_centers[:, 0], cluster_centers[:, 1],

hue=list(range(np.shape(cluster_centers[:, 0])[0])),

palette="nipy_spectral", marker="o", edgecolor='k',

s=250, legend=False)

plt.show()

The interface of k-mean provided in scikit-learn is very similar to that of a classifier.

Initialize: We define the number of clusters we want to look for.

kmeans = KMeans(n_clusters=k)

Fit: We fit the model to the data.

kmeans.fit(X)

Predict: We make predictions to which cluster each datapoint belongs.

y = kmeans.predict(X)

Note: In contrast to the classifiers, the k-means algorithm does not need any labels when the model is fitted to the data.

In addition to these standard functions has the trained model several additional features. On the one hand can the calculated cluster centers (or sometimes called centroids) be accessed:

kmeans.cluster_centers_

Furthermore, we can get the inertia, which is the sum of the squared distances of each datapoint to its closest cluster center.

kmeans.inertia_

We can cluster the dataset in two clusters and visualize the results:

kmeans = KMeans(n_clusters=2)

kmeans.fit(X)

y_hat = kmeans.predict(X)

plot_clustering_results(X, y_hat, kmeans.cluster_centers_)

We can also have a look at other values for k:

ks = np.linspace(1,8,8).astype(int)

for k in ks:

kmeans = KMeans(n_clusters=k)

kmeans.fit(X)

y_hat = kmeans.predict(X)

plot_clustering_results(X, y_hat, kmeans.cluster_centers_)

The elbow rule

Looking at the results above it seems that the hardest part of k-means is to select the right number for k, which can be any positive integer. How can we find a good value for k?

There are several approaches, one of which is the so called elbow rule: Plot the inertia for different values of k. This yields an asymptotic curve that moves at first fast towards zero and then slows down. Imagine the curve is an arm. The spot where the elbow of that arm would be is usually a good value for k.