Learning objectives

In this lecture we will have a look at dimensionality reduction and data visualisation. When we look at the feature vector of a dataset it can contain many features and each feature corresponds to its own dimension. In the first part of the notebook we will explore a method to reduce the dimensionality of a dataset. In the second part we will look at a an algorithm to extract information from high-dimensional data and display its structure in two dimensions. Both these methods fall into the cateogory of unsupersvised algorithms. The learning objectives are to be able to answer the following questions:

- What is dimensionality reduction?

- When is dimensionality reduction useful?

References

- Chapter 8: Dimensionality Reduction, Section PCA of Hands-On Machine Learning with Scikit-Learn and TensorFlow by Aurèlien Geron

Homework

As homework read the references, work carefully through the notebook and solve the exercises. This lecture covers several complex topics and it is important that you familiarise yourself by experimenting with the notebook.

Principal component analysis

First we have a look at a method to reduce the dimensions of a dataset. There are two main reasons why we would like to reduce the dimensionality of such datasets:

- Some machine learning algorithms struggle with high dimensional data.

- It is hard to visualize high dimensional data. In practice it is hard to visualise datasets that have more than two or three dimensions.

There is one very common approach to reduce the dimensionality of data: principal component analysis (PCA). The idea is that not all axis contain the same amount of variance and that there are even combination of axis that contain most variance. PCA seeks to find new coordinate axis such that the variance along the axis is maximised and ordered (the first principal component conatains most variance).

Figure: PCA can look intimidating at the beginning.

import pandas as pd

import numpy as np

from dslectures.core import get_dataset, convert_strings_to_categories, rmse, fill_missing_values_with_median

from pathlib import Path

from tqdm import tqdm

# data viz

import matplotlib.pyplot as plt

import matplotlib as mpl

import matplotlib.image as mpimg

import seaborn as sns

sns.set(color_codes=True)

sns.set_palette(sns.color_palette("muted"))

# ml magic

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestRegressor

from sklearn.metrics import r2_score

from sklearn.preprocessing import StandardScaler

from sklearn.decomposition import PCA

from sklearn.model_selection import cross_validate

#dslecture

from dslectures.core import rmse

# !pip install --upgrade dslectures

get_dataset('housing_processed.csv')

data_path = Path('../data/')

housing_data = pd.read_csv(data_path/'housing_processed.csv')

housing_data.head()

housing_data.shape

housing_data.describe()

We drop the two columns that contain categorical data with many categories. We could create extra columns for each categoriy but since there are many of them that would create a lot of extra features.

housing_data.drop(['city', 'postal_code'], axis=1, inplace=True)

Then we split the data into features and labels as usual:

X = housing_data.drop('median_house_value', axis=1)

y = housing_data['median_house_value']

feature_labels = X.columns

rf = RandomForestRegressor(n_estimators=100, max_features='sqrt', n_jobs=-1, random_state=42)

results = cross_validate(rf, X, y,

cv=5,

return_train_score=True,

scoring='neg_root_mean_squared_error')

rmse_full = -np.mean(results["test_score"])

We get an RMSE of roughly 60'000:

print(f'RMSE: {rmse_full}')

Standardisation

PCA works by finding new axes in the dataset that cover the most variance. Since variance is the average squared distance of each sample to the sample mean this depends on the scale of the feature. To illustrate this think of two columns that contain the height of the person measured in centimeter and meter. Although height described in these two columns are the same the variance will be 10'000 times larger in the centimeter column (100^2) and the standard deviation 100 times larger.

rand_values = np.random.randn(1000)

rand_values.var()

(100*rand_values).var()

For this reason it is important to scale the features such that they are comparable to each other. A common approach to do this is to transform the data such that the mean is zero and the standard deviation is one. The following formula achieves this:

$x_{std}=\frac{x_{old}-\mu}{\sigma}$

Scikit-learn provides a function to do this for us called StandardScaler. We can do this in one line with the function .fit_transform(). Similar to the function fit_predict() it combines the process of fitting which means calculating the mean and standard deviation of the dataset and then transforming the data by applying the above described formula.

X_std = StandardScaler().fit_transform(X)

X_std.shape

We can see that the mean is zero (or close enough) and the standard deviation is one:

X_std.mean(axis=0)

X_std.std(axis=0)

Now we apply principal component analysis to the standardised features. After initialising a PCA object we can use the .fit_transform() to find the principal components and then transform the dataset into these new coordinates.

fit_transform the test set. Use it on the training set and apply the mean and standard deviation with transform to the test set. This is true for all data preprocessing.pca = PCA()

X_pca = pca.fit_transform(X_std)

We can see that the transformed dataset still has the same shape. However the columns are no longer the features but the coordinates in the new principal component coordinates.

X_pca.shape

Explained variance

The explained variance is an important concept in principal component analysis. It tells us how much variance of the whole dataset is contained along one principal component axis. The component with the more variation can encode more information than features with little variation. The explained variance is stored in the pca object and we can use it to visualise the distribution. The explained_variance_ratio_ tells us the percentage of variance each component contains from the total variance.

pca.explained_variance_ratio_

pca_labels = [f'principal component {i+1}' for i in range(len(pca.explained_variance_ratio_))]

barplot = sns.barplot(x=pca_labels, y=pca.explained_variance_ratio_)

barplot.set_xticklabels(pca_labels, rotation=90)

plt.title('explained variance ratio')

plt.show()

We can see that already the first three components together account for 50% of the total variance in the dataset. We can visualise this more systematically by plotting the cumulative sum of the explained variance rations:

sns.lineplot(np.arange(len(pca.explained_variance_ratio_)), np.cumsum(pca.explained_variance_ratio_))

plt.ylabel('total explained variance')

plt.xlabel('number of included PCA components')

plt.show()

We see that with 7 of the 17 axes we already explain 90% percent of the variance in the dataset and that after 10 we almost reach 100%. That means the components 10-17 probably don't contain much information and can be discarded.

PCA vector composition

Naturally, we would like to know what these principal components mean. For example the first component contains 25% of the total variance. What information is stored in that component? The pca object also contains the components_ which define the transformation between the original dataset and the transformed one. We can print a few of the components and interpret them:

def print_tabular(labels, values):

df = pd.DataFrame(data=values, columns=labels)

display(df.T)

print('The first three principal components:')

print_tabular(feature_labels, pca.components_[:3])

The way to read this table is to think of it as a recipe to construct the first three principal components coordinates from the original features. Given a new datapoint we can for example calculate the first principal component by multiplying the longitude by the value in column 0 plus the latitude multiplied by the corresponding value in column 0 etc.. This weighted sum of the original featuers will yield the first coordinate in the PCA coordinate system.

${x_{PC,i}} = \sum_{i=0}^{n-1} w_{i,j} \cdot x_{feat, j}$

From this we can see that the first component mainly consists of the three features total_rooms, total_bedrooms, and households:

$x_{PC,0} = 0.096 \cdot longitude + (-0.093) \cdot lattidue + (-0.229) \cdot housing\_median\_value + \dots$

sns.scatterplot(x=X_pca[:,0], y=X_pca[:,1], alpha=0.1)

plt.show()

jplot = sns.jointplot(x=X_pca[:,0], y=X_pca[:,1], kind="hex", color="#4CB391",xlim=[-5,10], ylim=[-5,10])

jplot.set_axis_labels('Principal component 1', 'Principal component 2')

We observe that there seem to be two visible clusters along these two axis. We could now investigate these clusters further and might find some property of the dataset that might be useful to us or our customer. Let's do it!

plt.hist(X_pca[:,1], bins=np.linspace(-5, 5, 50))

plt.show()

We can see that the clusters seem to be seperated around -0.5. We can create a mask for the data points that are below that threshold.

left_group = X_pca[:,1]<-0.5

Furthermore, we only want to investigate the features that contribute to the second principal component. Therefore, we set another threshold for the minimum absolute feature coefficient.

pc1_features = list(feature_labels[np.abs(pca.components_[1])>0.2])

These are the components that mainly contribute to the second principal component:

pc1_features

Unfortunately, the StandardScaler transformed the DataFrame X into an array X_std. Let's transform it to a DataFrame again:

X_std = pd.DataFrame(data=X_std, columns=feature_labels)

With loc and the mask we just created we can now slice out the datapoints of the left and right group. We have a look at the statistics of the relevant features of the two groups with .describe().

X_std.loc[left_group, pc1_features].describe()

X_std.loc[~left_group, pc1_features].describe()

~ operator creates the boolean complement. So True becomes False and vice versa.Just looking at the feature mean of the two groups we see that there seems to be a significant difference between almost all features. To have a more detailed view let's compare the histograms of each feature:

for col in pc1_features:

bins = np.linspace(-3, 3, 20)

fig, ax = plt.subplots(1,1, figsize=(10,5))

X_std.loc[left_group, col].hist(ax=ax, bins=bins, color='C0', alpha=0.5, label='Group 1')

X_std.loc[~left_group, col].hist(ax=ax, bins=bins, color='C1', alpha=0.5, label='Group 2')

plt.legend(loc='best')

plt.title(col)

plt.show()

We can see that the most significant difference is between the longitute and latitude. Another interesting observation is that in the first group (blue) the rooms per household seem to be slightly lower and the bedrooms per room slightly higher. We will discuss further below. First we want to look at the longitude and latitude distribution of the two groups. First we make a hexplot to see the hotspots.

import matplotlib.gridspec as gridspec

print("Group 1")

sns.jointplot(

kind='hex',

x="longitude",

y="latitude",

cmap='magma',

data=X.loc[left_group],

)

plt.show()

print("Group 2")

sns.jointplot(

kind='hex',

x="longitude",

y="latitude",

cmap='magma',

data=X.loc[~left_group],

)

plt.show()

We see that in the first group there are mainly two hotspots while in the second group the hotspots seem to be further distributed. Recall that we can plot the longitude and latitude on top of a map. Let's investigate what these hot regions correspond to.

california_img=mpimg.imread('images/california.png')

fig, axes = plt.subplots(1, 2, figsize=(12,6))

fig = sns.scatterplot(

x="longitude",

y="latitude",

data=X.loc[left_group],

alpha=0.1,

s=1,

color="r",

edgecolor="none",

ax=axes[0]

)

axes[0].imshow(california_img, extent=[-124.55, -113.80, 32.45, 42.05], alpha=0.5)

axes[0].set_title("Group 1")

fig = sns.scatterplot(

x="longitude",

y="latitude",

data=X.loc[~left_group],

alpha=0.05,

s=3,

color="r",

edgecolor="none",

ax=axes[1]

)

axes[1].imshow(california_img, extent=[-124.55, -113.80, 32.45, 42.05], alpha=0.5)

axes[1].set_title("Group 2")

plt.show()

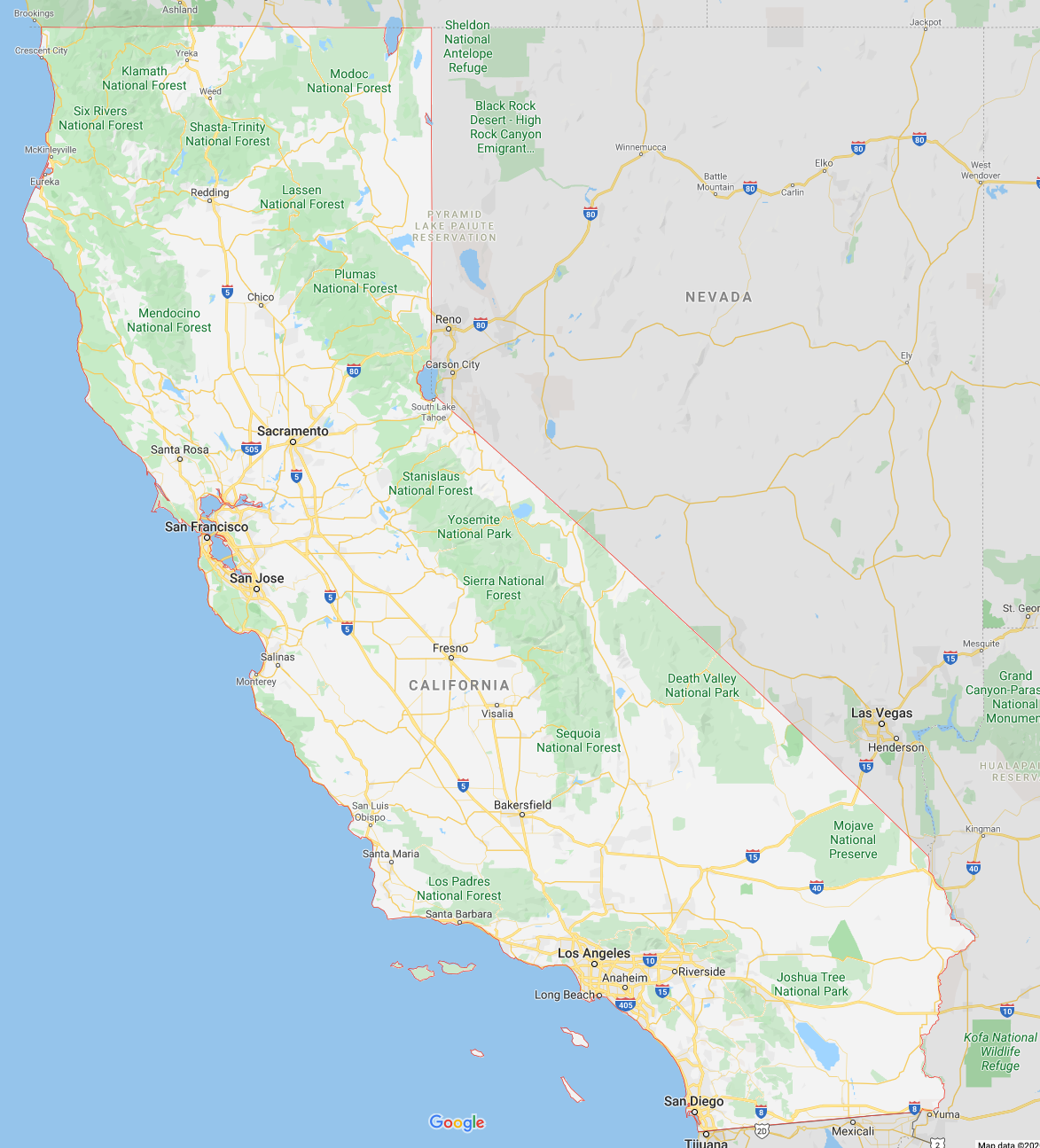

Comparing to a satellite image of California (see below) we see that the two hotspots in the first group correspond to Los Angeles and San Diego. The second group mainly includes San Francisco, the Silicon Valley, Sacramento, Fresno and Bakersfield. What is interesting to observe is that the second group also includes Riverside which is just next to Los Angeles. That means that the second principal component is not just the diagonal along the coast but specifically encodes Los Angeles and San Diege. If one wanted to build a classifier to determine whether a house is in Los Angeles or an other region of California one would only need to look at the second principle component of the dataset.

Circling back the observation about the rooms per households. It seems that in Los Angeles the appartments have slightly fewer rooms than the rest of California but a similar amount of bedrooms, hence the higher number of bedrooms per rooms. In other words in Los Angeles you would get less extra rooms per household.

Figure: Satellite image of California from Google Maps.

Finally, we can also look at the median value of the houses in the two groups:

bins=50

fig, ax = plt.subplots(1,1, figsize=(10,5))

y.loc[left_group].hist(ax=ax, bins=bins, color='C0', alpha=0.5, label='Group 1')

y.loc[~left_group].hist(ax=ax, bins=bins, color='C1', alpha=0.5, label='Group 2')

plt.legend(loc='best')

plt.title('median value')

plt.show()

One observes that the median value is higher for the first group (Los Angeles & San Diego). However, the long tail in both groups is quite similar. So living seems to be more expensive for low and medium income people while for the upper class the prices seem to be comparable.

rmse_valid =[]

for i in tqdm(range(1, 18)):

rf = RandomForestRegressor(n_estimators=100, max_features='sqrt', n_jobs=-1, random_state=42)

results = cross_validate(rf, X_pca[:, :i], y, cv=5,

return_train_score=True,

scoring='neg_root_mean_squared_error')

rmse_valid.append(-np.mean(results["test_score"]))

As an additional baseline we also create a median regressor:

rmse_median = rmse(y, [np.median(y)]*len(y))

Now we can plot the performances with the full Random Forest and the median regresssor as baselines for all subsets of components.

lineplot = sns.lineplot(x=np.arange(len(rmse_valid))+1 , y=rmse_valid, label='pca')

lineplot.axhline(rmse_median, c='y', linestyle='--', label='median')

lineplot.axhline(rmse_full, c='r', linestyle='--', label='full model')

plt.legend(loc='best')

plt.xlabel('number of PCA components')

plt.ylabel('RMSE');

So we can see that already with 4-7 principal components we get results that are quite close to the full model. As mentioned previously this has two advantages:

- Visualising 4 components is still hard but feasable compared to 17

- Training a Random Forest with half the features is twice as fast for training and inference.

Looking at the graph we see that there are several steep steps. It seems that adding these components improved the performance significantly. By taking the components with the biggest steps we can try to build a better selection of features:

features = [0,1,3,6,10]

rf = RandomForestRegressor(n_estimators=100, max_features='sqrt', n_jobs=-1, random_state=42)

results = cross_validate(rf, X_pca[:, features], y, cv=5,

return_train_score=True,

scoring='neg_root_mean_squared_error')

rmse_pca = -np.mean(results["test_score"])

rmse_pca

Just with 5 of 17 features we get <10% of the model that used all features. This model is 3-4 times faster to train and to make predictions. In practice there are more systematic ways of finding the best subset of features such as forward and backward selection (see link).

import pandas as pd

df = pd.DataFrame({'genre': [['a','b'], ['c'], []],

'artist': ['a1', 'a2', 'a3']})

df

df.explode('genre')

df.explode('genre').fillna('unk')

df['exists']=1

df.explode('genre').fillna('unk').pivot(index='artist',columns='genre',values='exists').fillna(0).reset_index()